AI Models & Datasets

Leading AI Research & Development

TensorLabs AI maintains a portfolio on Hugging Face through which we contribute to the open-source AI ecosystem. It includes fine-tuned models, curated datasets, and the research behind them.

Featured AI Models

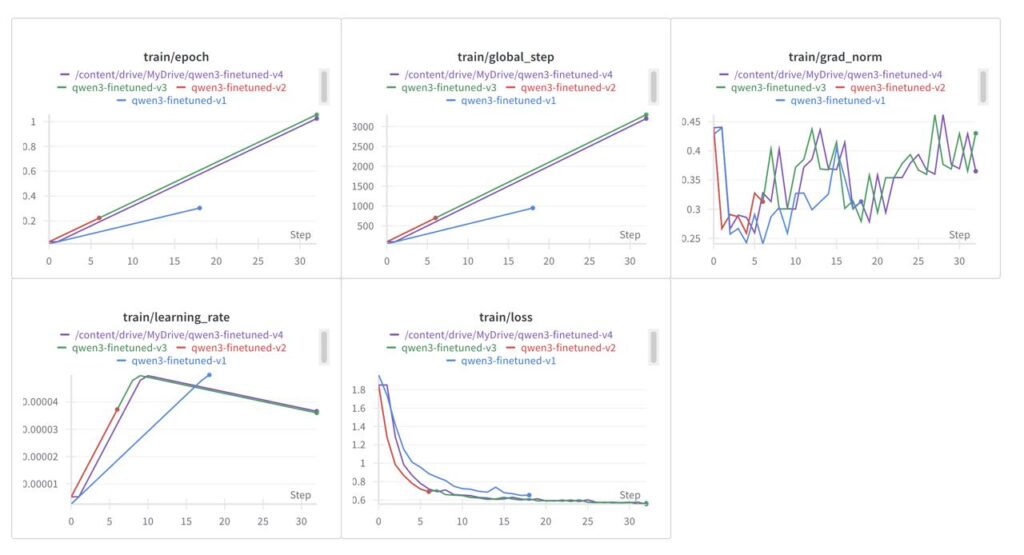

Architecture: Fine-tuned from the Qwen3-4B base model

Performance: Optimized for web-based AI operating systems

Quantizations: Two quantized versions are available for different deployment scenarios

Use Cases: Specialized for Julia OS integration and system-level AI tasks

Premium Datasets

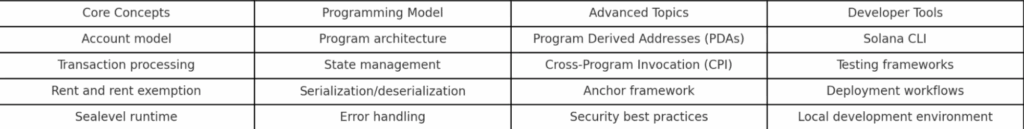

We created a dataset for our custom Solana developer model. It consists of 25,000 question-answer pairs, generated in five batches of 5,000 using a rotating set of templates.

We based the content on key sources such as the official Solana documentation, Solana Cookbook, Anchor docs, SPL references, blog posts, and GitHub repositories.

Templates

To ensure variety and depth, we used seven distinct templates:

- Basic, Code-Focused, Conceptual, and Edge Cases for general QA coverage

- Anchor, PDA, and CPI for domain-specific knowledge

Templates were rotated either sequentially for balanced coverage or randomly for natural variation.
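The two rotation modes can be sketched as follows. This is a minimal illustration, not our generation pipeline: the function names are hypothetical, and the random mode here assumes a uniform choice among the seven templates.

```python
import itertools
import random
from typing import List, Optional

# The seven template names listed above.
TEMPLATES = ["Basic", "Code-Focused", "Conceptual", "Edge Cases", "Anchor", "PDA", "CPI"]


def sequential_rotation(n: int) -> List[str]:
    """Cycle through the templates in a fixed order, so each is used equally often."""
    return list(itertools.islice(itertools.cycle(TEMPLATES), n))


def random_rotation(n: int, seed: Optional[int] = None) -> List[str]:
    """Pick a template uniformly at random for each item (assumed uniform) for natural variation."""
    rng = random.Random(seed)
    return [rng.choice(TEMPLATES) for _ in range(n)]


if __name__ == "__main__":
    print(sequential_rotation(9))       # wraps back to "Basic" after the seventh item
    print(random_rotation(5, seed=42))  # reproducible for a fixed seed
```

Sequential rotation guarantees exact balance over any multiple of seven items, while random rotation trades that guarantee for less predictable ordering.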

Distribution

Templates were weighted as follows:

- 60% general templates (Basic 20%, Code-Focused 20%, Conceptual 10%, Edge Cases 10%)

- 40% specialized templates (Anchor 15%, PDA 15%, CPI 10%)
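The weights above can be turned into concrete numbers with a short sketch like the one below. It assumes the percentages apply to the full 25,000-pair dataset; `allocate` and `sample_templates` are hypothetical helpers, the first computing exact per-template counts and the second drawing templates at random in proportion to the weights.

```python
import random
from typing import Dict, List

# Target share of each template in percent, taken from the distribution above.
WEIGHTS: Dict[str, int] = {
    "Basic": 20, "Code-Focused": 20, "Conceptual": 10, "Edge Cases": 10,  # general: 60%
    "Anchor": 15, "PDA": 15, "CPI": 10,                                   # specialized: 40%
}


def allocate(total: int, weights: Dict[str, int]) -> Dict[str, int]:
    """Convert percentage weights into per-template pair counts for `total` pairs."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100%")
    return {name: total * pct // 100 for name, pct in weights.items()}


def sample_templates(n: int, weights: Dict[str, int], seed: int = 0) -> List[str]:
    """Draw n templates at random, each with probability proportional to its weight."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[k] for k in names], k=n)


if __name__ == "__main__":
    print(allocate(25_000, WEIGHTS))
    # {'Basic': 5000, 'Code-Focused': 5000, 'Conceptual': 2500, 'Edge Cases': 2500,
    #  'Anchor': 3750, 'PDA': 3750, 'CPI': 2500}
```

Exact allocation hits the target shares precisely, whereas weighted sampling only approximates them, with the gap shrinking as the number of pairs grows.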

Quality

A quality control system rated each QA pair on five criteria (relevance, correctness, completeness, clarity, and code quality) to maintain a consistent standard across the dataset.
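A filter over those five criteria might look like the sketch below. The 1 to 5 scale, the minimum mean of 4.0, the per-criterion floor of 3, and the `QAPair` and `passes_quality` names are all assumptions for illustration; how the actual system assigned and combined scores is not described here.

```python
from dataclasses import dataclass
from typing import Dict

# The five criteria named above; the scale and thresholds below are assumptions.
CRITERIA = ("relevance", "correctness", "completeness", "clarity", "code_quality")


@dataclass
class QAPair:
    question: str
    answer: str
    scores: Dict[str, int]  # criterion -> score on an assumed 1-5 scale


def passes_quality(pair: QAPair, min_mean: float = 4.0, min_each: int = 3) -> bool:
    """Accept a pair only if every criterion is scored and both thresholds are met."""
    missing = [c for c in CRITERIA if c not in pair.scores]
    if missing:
        raise ValueError(f"missing scores for: {missing}")
    values = [pair.scores[c] for c in CRITERIA]
    return min(values) >= min_each and sum(values) / len(values) >= min_mean


if __name__ == "__main__":
    pair = QAPair("What is a PDA?", "A program-derived address ...",
                  {"relevance": 5, "correctness": 5, "completeness": 5,
                   "clarity": 5, "code_quality": 3})
    print(passes_quality(pair))  # True: mean 4.6, lowest score 3
```

Combining a mean threshold with a per-criterion floor keeps a pair with one badly failed criterion, such as an incorrect answer, from passing on the strength of its other scores.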

Research Impact & Community

- Model Downloads: 1,000+ across our model portfolio

- Active Community: Growing research community and developer adoption

- Technical Innovation: Cutting-edge fine-tuning techniques and optimization

- Open Source Contributions: Regular model releases and dataset contributions

- Industry Recognition: Models used in production systems and research projects